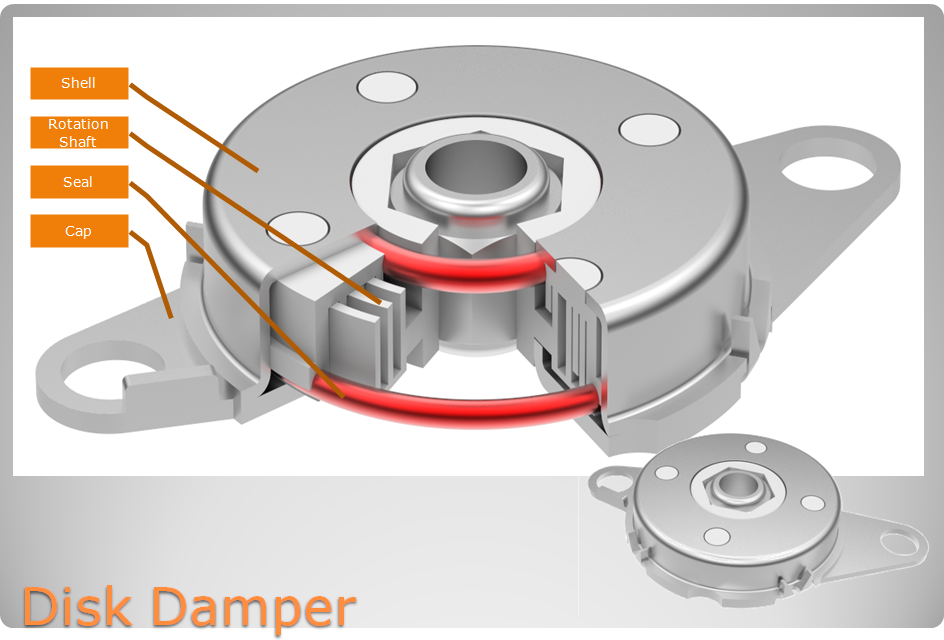

The flat disk rotary damper is mainly used where there is a large diameter but little height available. Disk dampers absorb energy and decelerate rotation. We offer many different models, from mild to extreme damping. Our disk dampers suit a wide range of applications, from scanners and glove boxes to auditorium seating. They are designed to control and smooth the opening and closing of lids and doors.

Our damper lets structural movements run softly, silently and safely, mitigating impact loads, avoiding strike damage, prolonging mechanical life, reducing noise, improving product quality and increasing customer satisfaction.

NOTE:

1. For specific torque requirements, please contact the corresponding product engineer.

2. Max. rotation speed: 50 r/min

3. Max. cycle rate: 6 cycles/min (1 cycle = 360° clockwise + 360° counterclockwise)

4. Operating temperature: -10 to 50 ℃

5. Storage temperature: -30 to 80 ℃
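The limits in the NOTE list can be turned into a quick screening check for a planned application. This is a minimal sketch: the function name `check_operating_conditions` and the choice to return a list of violations are illustrative assumptions, not part of the datasheet. Passing the check only means the listed speed, cycle-rate and operating-temperature limits are respected; torque selection still goes through the product engineer.

```python
from typing import List

# Limits transcribed from the NOTE list (hypothetical constant names).
MAX_SPEED_RPM = 50.0              # max. rotation speed, r/min
MAX_CYCLES_PER_MIN = 6.0          # 1 cycle = 360° clockwise + 360° counterclockwise
OPERATING_TEMP_C = (-10.0, 50.0)  # operating temperature range, ℃


def check_operating_conditions(speed_rpm: float, cycles_per_min: float,
                               temp_c: float) -> List[str]:
    """Hypothetical helper: return the violated limits (empty list = all OK)."""
    problems: List[str] = []
    if speed_rpm > MAX_SPEED_RPM:
        problems.append(f"speed {speed_rpm} r/min > {MAX_SPEED_RPM} r/min")
    if cycles_per_min > MAX_CYCLES_PER_MIN:
        problems.append(f"cycle rate {cycles_per_min}/min > {MAX_CYCLES_PER_MIN}/min")
    low, high = OPERATING_TEMP_C
    if not low <= temp_c <= high:
        problems.append(f"temperature {temp_c} ℃ outside {low}..{high} ℃")
    return problems


if __name__ == "__main__":
    print(check_operating_conditions(20, 1, 23))   # [] : within all listed limits
    print(check_operating_conditions(60, 7, -20))  # three violations
```

Note that the limits are treated as inclusive: exactly 50 r/min, 6 cycles/min or 50 ℃ still passes.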

| No. | Description | Material |
|-----|-------------|----------|
| 1 | Shell | SPFC |
| 2 | Cover | SPFC |
| 3 | Shaft | PA/POM |

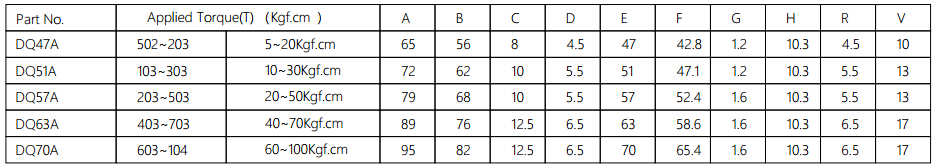

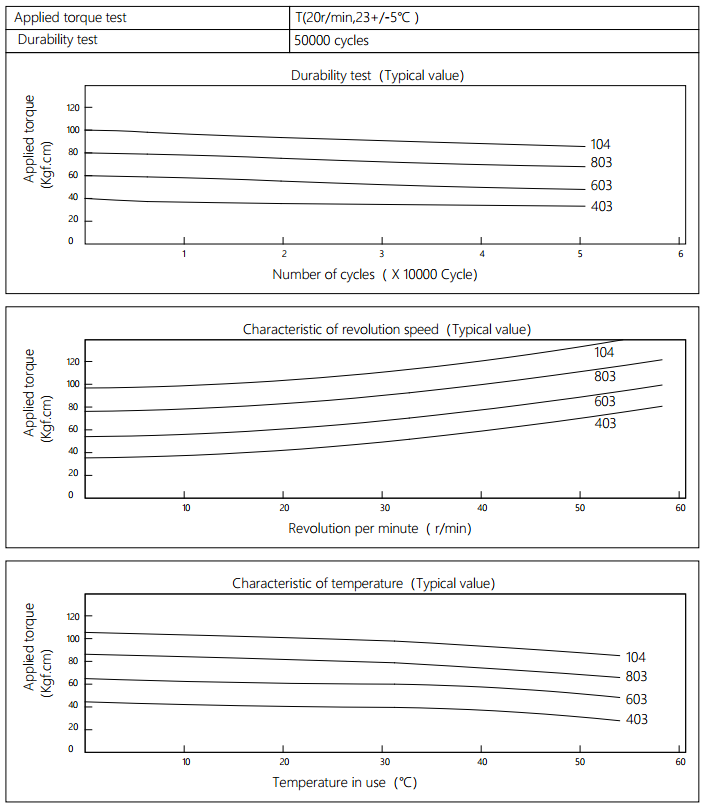

Applied torque (T):

Test temperature: 23 ± 5 ℃

Rotating speed: 20 r/min

Durability test method: 360° clockwise, then 360° counterclockwise (1 cycle)

Rotating speed: 20 r/min

Test frequency: 1 cycle/min

Test temperature: 23 ± 5 ℃

Durability test cycles: 50,000

Pass criterion: after the test, store the damper at room temperature for 24 hours or more, then measure the torque; it must remain within T ± 30% T.
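The pass criterion is a simple band check: after 50,000 cycles and at least 24 hours at room temperature, the measured torque must lie within T ± 30% of T. The sketch below assumes T is the reference torque for the damper under test (the sheet does not say whether this is the catalog value or a pre-test measurement) and that both values use the same unit. The function names are hypothetical. It also shows the running time implied by 50,000 cycles at 1 cycle/min: 50,000 minutes, or roughly 34.7 days, not counting the 24-hour recovery period.

```python
def torque_within_tolerance(t_rated: float, t_measured: float,
                            tolerance: float = 0.30) -> bool:
    """True if t_measured lies in the band t_rated ± tolerance * t_rated."""
    return abs(t_measured - t_rated) <= tolerance * t_rated


def durability_run_minutes(cycles: int = 50_000, cycles_per_min: float = 1.0) -> float:
    """Running time of the durability test, excluding the 24 h recovery period."""
    return cycles / cycles_per_min


if __name__ == "__main__":
    print(torque_within_tolerance(10.0, 12.5))  # True  (|2.5| <= 3.0)
    print(torque_within_tolerance(10.0, 6.5))   # False (|3.5| >  3.0)
    minutes = durability_run_minutes()
    print(f"{minutes:.0f} min = {minutes / 60 / 24:.1f} days")
```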

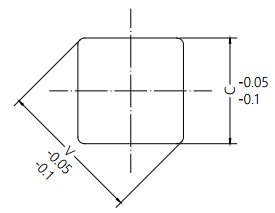

Dimensional tolerance for mating the damper's square hole with the rotating shaft.


Shenzhen ABD Equipment Co., Ltd., https://www.abddamper.com